In this article we’ll discuss how you can block unwanted users or bots from accessing your website via .htaccess rules. The .htaccess file is a hidden file on the server that can be used to control access to your website among other features.

In the sections below, we’ll walk through several ways to block unwanted users from accessing your website.

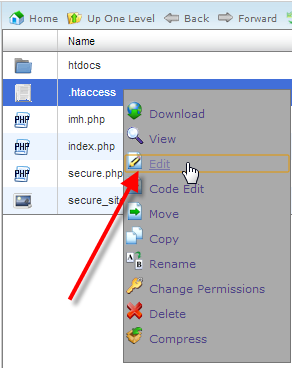

Edit your .htaccess file

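To use any of the blocking methods below, you’ll need to edit the .htaccess file in your website’s root directory. Rules placed there apply to the whole site. Several examples use the Order, Allow, and Deny directives from Apache 2.2. On Apache 2.4 these only work when the mod_access_compat module is loaded.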

Block by IP address

You might have one particular IP address, or several IP addresses, causing problems on your website. In that case, you can block those addresses from accessing your site outright.

Block a single IP address

If you need to block a single IP address, or several IPs that aren’t in the same range, use this rule. Add a separate deny from line for each address:

deny from 123.123.123.123
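If your server runs Apache 2.4, you can write the same block with the newer Require directives from mod_authz_core and mod_authz_host. The sketch below assumes Apache 2.4 and a host that allows these directives in .htaccess (typically AllowOverride AuthConfig). The second address, 124.124.124.124, is a hypothetical example of a second, unrelated IP:

```apache
# Apache 2.4 sketch: allow everyone except the listed addresses
<RequireAll>
    Require all granted
    # Block one address
    Require not ip 123.123.123.123
    # Hypothetical second, unrelated address
    Require not ip 124.124.124.124
</RequireAll>
```

Apache’s documentation discourages mixing this Require style with Order/Allow/Deny directives in the same section, so pick one style per file.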

Block a range of IP addresses

To block an IP range, such as 123.123.123.1 – 123.123.123.255, you can leave off the last octet:

deny from 123.123.123

You can also use CIDR (Classless Inter-Domain Routing) notation to block IP ranges:

To block the range 123.123.123.1 – 123.123.123.255, use 123.123.123.0/24

To block the range 123.123.64.1 – 123.123.127.255, use 123.123.64.0/18

deny from 123.123.123.0/24
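On Apache 2.4, the Require not ip form accepts the same partial-address and CIDR notations. The sketch below assumes Apache 2.4 and reuses the example ranges above:

```apache
# Apache 2.4 sketch: block whole ranges, allow everyone else
<RequireAll>
    Require all granted
    # Partial address: blocks 123.123.123.0 - 123.123.123.255
    Require not ip 123.123.123
    # CIDR: blocks 123.123.64.0 - 123.123.127.255
    Require not ip 123.123.64.0/18
</RequireAll>
```

The first range falls inside the second, so in practice the /18 line alone would cover both. They appear together here only to illustrate the two notations.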

Block bad users based on their User-Agent string

Some malicious users send requests from many different IP addresses but use the same User-Agent string for all of them. In that case, you can block them by their User-Agent string instead.

Block a single bad User-Agent

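To block one particular User-Agent string, you can use this RewriteRule. The [NC] flag makes the match case-insensitive, [F] returns a 403 Forbidden response, and [L] stops processing further rules: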

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} Baiduspider [NC]

RewriteRule .* - [F,L]

Alternatively, you can use the BrowserMatchNoCase Apache directive like this:

BrowserMatchNoCase "Baiduspider" bots

Order Allow,Deny

Allow from ALL

Deny from env=bots
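On Apache 2.4 without mod_access_compat, the BrowserMatchNoCase line can stay as it is. You replace the Order/Allow/Deny lines with a Require not env check. The sketch below assumes Apache 2.4 with mod_setenvif and mod_authz_core enabled:

```apache
# Tag any request whose User-Agent contains "Baiduspider" (case-insensitive)
BrowserMatchNoCase "Baiduspider" bots

# Allow everyone except requests tagged with the "bots" variable
<RequireAll>
    Require all granted
    Require not env bots
</RequireAll>
```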

Block multiple bad User-Agents

If you wanted to block multiple User-Agent strings at once, you could do it like this:

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} ^.*(Baiduspider|HTTrack|Yandex).*$ [NC]

RewriteRule .* - [F,L]

Or you can also use the BrowserMatchNoCase directive like this:

BrowserMatchNoCase "Baiduspider" bots

BrowserMatchNoCase "HTTrack" bots

BrowserMatchNoCase "Yandex" bots

Order Allow,Deny

Allow from ALL

Deny from env=bots
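BrowserMatchNoCase takes a regular expression, so you can collapse the three BrowserMatchNoCase directives above into one. This uses the same alternation the RewriteCond version uses. The sketch below uses the Apache 2.4 Require syntax. On Apache 2.2, keep the Order/Allow/Deny lines shown above instead:

```apache
# One regex matches any of the three User-Agent substrings, case-insensitively
BrowserMatchNoCase "(Baiduspider|HTTrack|Yandex)" bots

<RequireAll>
    Require all granted
    Require not env bots
</RequireAll>
```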

Block by referer

Block a single bad referer

To block a single bad referer, such as example.com, you could use this RewriteRule:

RewriteEngine On

RewriteCond %{HTTP_REFERER} example\.com [NC]

RewriteRule .* - [F]

Alternatively, you could use the SetEnvIfNoCase Apache directive like this:

SetEnvIfNoCase Referer "example\.com" bad_referer

Order Allow,Deny

Allow from ALL

Deny from env=bad_referer
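SetEnvIfNoCase works the same way with the Apache 2.4 Require syntax. The sketch below assumes Apache 2.4 with mod_setenvif and mod_authz_core enabled:

```apache
# Tag requests whose Referer header contains "example.com" (case-insensitive)
SetEnvIfNoCase Referer "example\.com" bad_referer

# Allow everyone except requests tagged as bad_referer
<RequireAll>
    Require all granted
    Require not env bad_referer
</RequireAll>
```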

Block multiple bad referers

To block multiple referers, such as example.com and example.net, you could use:

RewriteEngine On

RewriteCond %{HTTP_REFERER} example\.com [NC,OR]

RewriteCond %{HTTP_REFERER} example\.net [NC]

RewriteRule .* - [F]

Or you can also use the SetEnvIfNoCase Apache directive like this:

SetEnvIfNoCase Referer "example\.com" bad_referer

SetEnvIfNoCase Referer "example\.net" bad_referer

Order Allow,Deny

Allow from ALL

Deny from env=bad_referer
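As with User-Agents, you can combine the two SetEnvIfNoCase directives above into a single regular expression. The sketch below assumes Apache 2.4. On Apache 2.2, keep the Order/Allow/Deny lines instead of the RequireAll block:

```apache
# Match either referer domain with one case-insensitive regex
SetEnvIfNoCase Referer "example\.(com|net)" bad_referer

<RequireAll>
    Require all granted
    Require not env bad_referer
</RequireAll>
```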

Temporarily block bad bots

In some cases you might not want to send a visitor a 403 response, which is simply an access-denied message. For example, suppose your site is getting a large spike in traffic for the day from a promotion you’re running. You may not want good search engine bots such as Google or Yahoo to start indexing your site while the extra traffic is already stressing the server.

The following code sets up a basic error document for a 503 response. A 503 (Service Unavailable) response is the standard way to tell a search engine that its request is temporarily blocked and that it should try again later. This differs from denying access with a 403 response. Google has confirmed that with a 503 it will come back and try to index the page again instead of dropping it from its index.

The code catches requests from any User-Agent containing the words bot, crawl, or spider, which most major search engine crawlers match. The second RewriteCond line still lets these bots request a robots.txt file to check for new rules. Any other request gets a 503 response with the message “Site temporarily disabled for crawling”.

Typically you don’t want to leave a 503 block in place for longer than 2 days. Otherwise Google might start to interpret this as an extended server outage and could begin to remove your URLs from their index.

ErrorDocument 503 "Site temporarily disabled for crawling"

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} ^.*(bot|crawl|spider).*$ [NC]

RewriteCond %{REQUEST_URI} !^/robots\.txt$

RewriteRule .* - [R=503,L]

This method is also useful when new bots are crawling your site and making excessive requests, and you want to block or slow them down with your robots.txt file. It lets you return a 503 for their requests until they read your new robots.txt rules and start obeying them. You can read about how to stop search engines from crawling your website for more information regarding this.
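A 503 block shouldn’t stay in place for more than a couple of days, so one option is to give it a built-in expiry. mod_rewrite exposes the server’s local time as %{TIME} in YYYYMMDDHHMMSS format. A CondPattern that starts with < compares strings lexicographically, so the extra condition below switches the block off once that timestamp is reached. In this sketch, the cut-off 20151023000000 is a hypothetical value you would replace with your own:

```apache
ErrorDocument 503 "Site temporarily disabled for crawling"

RewriteEngine On

# Match common crawler User-Agents (case-insensitive)
RewriteCond %{HTTP_USER_AGENT} (bot|crawl|spider) [NC]
# Still let crawlers fetch robots.txt
RewriteCond %{REQUEST_URI} !^/robots\.txt$
# Hypothetical cut-off: apply the block only before 2015-10-23 00:00:00 server time
RewriteCond %{TIME} <20151023000000
RewriteRule .* - [R=503,L]
```

Both values are 14-digit strings, so the lexicographic comparison gives the same result as a chronological one.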

You should now understand how to use a .htaccess file to help block access to your website in multiple ways.

Originally posted on October 21, 2015 @ 2:11 pm